Reposted from: https://wiki.pathmind.com/generative-adversarial-network-gan

Reposted from: https://wiki.pathmind.com/

Reposted from: https://zhuanlan.zhihu.com/p/42606381

Reposted from: https://zhuanlan.zhihu.com/p/33752313 (Understanding GANs in Plain Terms)

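The author translated this from the original article, A Beginner's Guide to Generative Adversarial Networks (GANs), which is a good introduction to GANs. If you read it all the way through and still don't get GANs, come bite me~ Hahaha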

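Generative adversarial networks (GANs) are deep neural network architectures made of two networks that are pitted against each other (hence "adversarial").

GANs were introduced in 2014 by Ian Goodfellow and other researchers at the University of Montreal, including Yoshua Bengio. Referring to GANs, Yann LeCun called adversarial training "the most interesting idea in the last 10 years in machine learning."

GANs have huge potential because they can, in principle, learn to mimic any data distribution. That means GANs can be taught to create worlds eerily similar to our own in any domain: images, music, speech, prose. In a sense they are robot artists, and their output is impressive, even moving.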

In a surreal turn, Christie’s sold a portrait for $432,000 that had been generated by a GAN, based on open-source code written by Robbie Barrat of Stanford. Like most true artists, he didn’t see any of the money, which instead went to the French company, Obvious.

In 2019, DeepMind showed that variational autoencoders (VAEs) could outperform GANs on face generation.

Generative vs. Discriminative Algorithms

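To understand GANs, you should know how generative algorithms work, and the best way to do that is to contrast them with discriminative algorithms. Discriminative algorithms try to classify input data; that is, given the features of a data instance, they predict the label or category to which that data belongs.

For example, given all the words in an email, a discriminative algorithm can predict whether the message is spam or not. "Spam" is one of the labels, and the bag of words gathered from the email makes up the features of the input data. When the problem is expressed mathematically, the label is called y and the features are called x. The formulation p(y|x) means "the probability of y given x", which in this case translates to "the probability that an email is spam, given the words it contains."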
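To make p(y|x) concrete, here is a minimal sketch of a discriminative spam filter: logistic regression on binary bag-of-words features, in plain Python so it runs without extra libraries. The six-email corpus, the vocabulary, the learning rate and the epoch count are made-up assumptions for illustration; a real filter would use far more data and a library implementation.

```python
import math

# Hypothetical toy corpus: (set of words in the email, label), 1 = spam, 0 = not spam.
emails = [
    ({"win", "money", "now"}, 1),
    ({"free", "money", "offer"}, 1),
    ({"win", "free", "prize"}, 1),
    ({"meeting", "tomorrow", "agenda"}, 0),
    ({"project", "meeting", "notes"}, 0),
    ({"lunch", "tomorrow"}, 0),
]
vocab = sorted(set().union(*(words for words, _ in emails)))

def features(words):
    """Binary bag-of-words vector x over the shared vocabulary."""
    return [1.0 if w in words else 0.0 for w in vocab]

def sigmoid(s):
    return 1.0 / (1.0 + math.exp(-s))

def p_spam_given_x(weights, bias, x):
    """Discriminative model: directly outputs p(y = spam | x)."""
    return sigmoid(sum(wi * xi for wi, xi in zip(weights, x)) + bias)

def train_logistic_regression(data, lr=0.5, epochs=200):
    """Stochastic gradient descent on the log loss."""
    weights, bias = [0.0] * len(vocab), 0.0
    for _ in range(epochs):
        for words, y in data:
            x = features(words)
            err = p_spam_given_x(weights, bias, x) - y  # d(log loss)/d(logit)
            weights = [wi - lr * err * xi for wi, xi in zip(weights, x)]
            bias -= lr * err
    return weights, bias

weights, bias = train_logistic_regression(emails)
print(p_spam_given_x(weights, bias, features({"free", "money"})))
print(p_spam_given_x(weights, bias, features({"meeting", "notes"})))
```

The model never describes what a spam email looks like; it only learns weights that push p(y|x) toward the correct label.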

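So discriminative algorithms map features to labels; they are concerned only with that correlation. One way to think about generative algorithms is that they do the opposite: instead of predicting a label given certain features, they try to predict features given a certain label.

The question a generative algorithm tries to answer is: assuming this email is spam, how likely are these features? While discriminative models care about the relationship between y and x, generative models care about "how you get x." They let you capture p(x|y), the probability of x given y, that is, the probability of the features given a label or category. (That said, generative algorithms can also be used as classifiers; they simply do more than categorize input data.)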
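The spam example can be flipped around with naive Bayes, a classic generative classifier. The plain-Python sketch below (again on a made-up toy corpus) estimates p(y) and p(x|y), uses Bayes' rule to recover p(y|x) for classification, and can also sample words from p(x|y), which a purely discriminative model cannot do. Add-one smoothing and the assumption that words are independent given the label are standard naive Bayes choices, not something the original article specifies.

```python
import math
import random
from collections import Counter

# Hypothetical toy corpus: (words in the email, label).
emails = [
    (["win", "money", "now"], "spam"),
    (["free", "money", "offer"], "spam"),
    (["win", "free", "prize"], "spam"),
    (["meeting", "tomorrow", "agenda"], "not_spam"),
    (["project", "meeting", "notes"], "not_spam"),
    (["lunch", "tomorrow"], "not_spam"),
]
labels = sorted({y for _, y in emails})
vocab = sorted({w for words, _ in emails for w in words})

# Generative model: estimate p(y) and per-class word counts for p(word | y).
prior = {y: sum(1 for _, lab in emails if lab == y) / len(emails) for y in labels}
word_counts = {y: Counter(w for words, lab in emails if lab == y for w in words) for y in labels}

def word_prob(w, y):
    """p(word | y) with add-one (Laplace) smoothing."""
    total = sum(word_counts[y].values())
    return (word_counts[y][w] + 1) / (total + len(vocab))

def log_p_x_given_y(words, y):
    """log p(x | y), assuming words are independent given the label (the 'naive' part)."""
    return sum(math.log(word_prob(w, y)) for w in words)

def p_y_given_x(words):
    """Bayes' rule: p(y | x) is proportional to p(x | y) p(y), normalized over labels."""
    log_joint = {y: log_p_x_given_y(words, y) + math.log(prior[y]) for y in labels}
    m = max(log_joint.values())  # subtract the max for numerical stability
    unnorm = {y: math.exp(v - m) for y, v in log_joint.items()}
    total = sum(unnorm.values())
    return {y: v / total for y, v in unnorm.items()}

def sample_words(y, n, rng):
    """Generate n words from p(word | y): 'if this email were spam, what might it contain?'"""
    return rng.choices(vocab, weights=[word_prob(w, y) for w in vocab], k=n)

print(p_y_given_x(["free", "money"]))
print(sample_words("spam", 4, random.Random(0)))
```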

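Another way to distinguish discriminative from generative models:

- Discriminative models learn the boundary between classes

- Generative models model the distribution of individual classes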
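A one-feature toy example shows the difference between the two bullets. The data values and the class names A and B are hypothetical; the discriminative side is a simple decision stump (a single threshold chosen to minimize training errors) and the generative side fits one Gaussian per class, both picked only for simplicity.

```python
import random
import statistics

# Hypothetical 1-D data: a single feature value per example, two classes.
data = {
    "A": [2.1, 1.9, 2.4, 2.0, 1.6, 2.2],
    "B": [4.8, 5.1, 5.5, 4.9, 5.2, 4.6],
}

# Discriminative view: learn only where the boundary between the classes lies.
# Try the midpoints between neighbouring points and keep the one with the fewest
# training errors (this sketch assumes class A lies below class B).
points = sorted([(x, "A") for x in data["A"]] + [(x, "B") for x in data["B"]])
candidates = [(p[0] + q[0]) / 2 for p, q in zip(points, points[1:])]

def training_errors(t):
    return sum((x < t) != (label == "A") for x, label in points)

threshold = min(candidates, key=training_errors)

def classify(x):
    return "A" if x < threshold else "B"

# Generative view: model the distribution of each class, here one Gaussian per class.
params = {label: (statistics.mean(xs), statistics.stdev(xs)) for label, xs in data.items()}

def sample(label, n, rng):
    """Draw new, synthetic examples of a class; a boundary alone cannot do this."""
    mu, sigma = params[label]
    return [rng.gauss(mu, sigma) for _ in range(n)]

rng = random.Random(42)
print(threshold, classify(3.0), sample("B", 3, rng))
```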

How Do GANs Work?

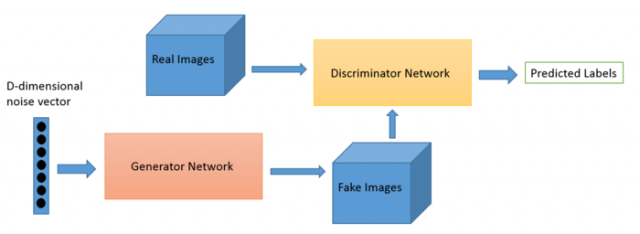

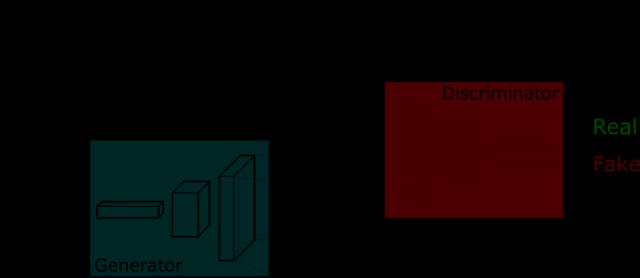

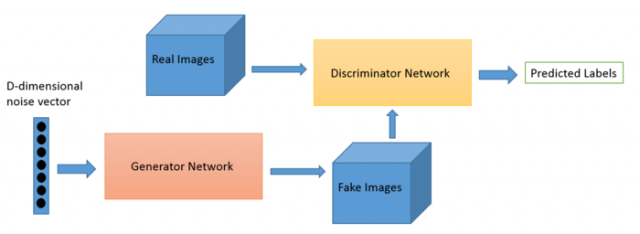

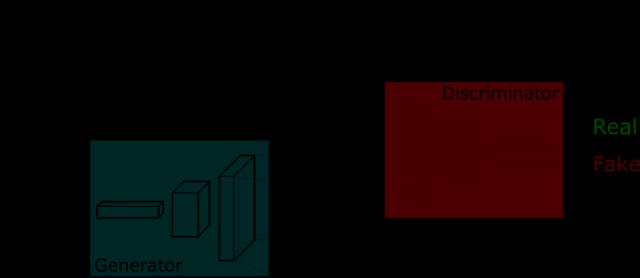

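One neural network, called the generator, generates new data instances, while the other, called the discriminator, evaluates them for authenticity; that is, the discriminator decides whether each data instance it reviews belongs to the actual training dataset or not.

Let's say we're trying to do something more banal than mimic the Mona Lisa: we're going to generate handwritten digits like those in the MNIST dataset, which is taken from the real world. When the discriminator is shown an instance from the true MNIST dataset, its goal is to recognize it as authentic.

Meanwhile, the generator is creating new, synthetic images and passing them to the discriminator, in the hope that they too will be deemed authentic even though they are fake. The goal of the generator is to produce passable handwritten digits: to fool that gullible discriminator without getting caught. The goal of the discriminator is to identify the images coming from the generator as fake.

Here are the steps a GAN takes (a contest in which the system plays against itself):

- The generator takes in random numbers and returns an image.

- This generated image is fed into the discriminator alongside a stream of images taken from the real dataset.

- The discriminator takes in both real and fake images and returns probabilities, a number between 0 and 1, with 1 representing a prediction of authenticity and 0 representing fake.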
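The three steps can be traced with a toy sketch in which each "image" is a single number. The linear generator, the logistic discriminator with fixed parameter values, and the real-data distribution N(4, 1.25) are all assumptions for illustration. Nothing is trained here; the code only shows what flows where and how the ground-truth labels (1 = real, 0 = fake) enter the discriminator's loss.

```python
import math
import random

rng = random.Random(0)

def real_batch(n):
    """Hypothetical 'real dataset': single numbers drawn from N(4, 1.25)."""
    return [rng.gauss(4.0, 1.25) for _ in range(n)]

def generator(z, a=1.0, b=0.0):
    """Takes a random number z and returns a sample; here a linear map G(z) = a*z + b."""
    return a * z + b

def discriminator(x, w=0.5, c=-1.0):
    """Returns a probability in (0, 1) that x is real: sigmoid(w*x + c)."""
    return 1.0 / (1.0 + math.exp(-(w * x + c)))

def bce(p, label):
    """Binary cross-entropy of prediction p against label 1 (real) or 0 (fake)."""
    return -(label * math.log(p) + (1 - label) * math.log(1 - p))

n = 4
noise = [rng.gauss(0.0, 1.0) for _ in range(n)]
fakes = [generator(z) for z in noise]                # step 1: random numbers in, samples out
reals = real_batch(n)                                 # step 2: fed in alongside real data
scores = [discriminator(x) for x in reals + fakes]   # step 3: each scored between 0 and 1
labels = [1] * n + [0] * n                            # ground truth: 1 = real, 0 = fake
d_loss = sum(bce(p, y) for p, y in zip(scores, labels)) / len(scores)
print([round(s, 3) for s in scores], round(d_loss, 3))
```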

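So you have a double feedback loop:

- The discriminator is in a feedback loop with the ground truth of the images, which we know.

- The generator is in a feedback loop with the discriminator.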
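In the original paper by Goodfellow et al. (2014), the two loops are written as a single two-player minimax game over a value function V(D, G), where p_data is the real-data distribution and p_z is the noise distribution fed to the generator. The discriminator maximizes V by assigning high probability to real samples (the first term, its loop with the ground truth) and low probability to generated ones; the generator minimizes V by making D(G(z)) large (the second term, its loop through the discriminator).

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}(x)}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z(z)}\left[\log\left(1 - D(G(z))\right)\right]
$$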

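You can think of a GAN as a game of cat and mouse between a counterfeiter and a cop: the counterfeiter is learning to pass fake bills, and the cop is learning to detect them. Both are dynamic; that is, the cop is also in training (to extend the analogy, perhaps the central bank is flagging bills that slipped through), and each side learns the other's methods in a constant escalation.

The discriminator network is a standard convolutional network that can categorize the images fed to it: a binary classifier that labels images as real or fake. The generator is, in a sense, an inverse convolutional network: while a standard convolutional classifier takes an image and downsamples it to produce a probability, the generator takes a vector of random noise and upsamples it into an image. The first throws away data through downsampling techniques such as max pooling, while the second generates new data.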
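The down-versus-up contrast can be seen in one dimension. This plain-Python sketch compares max pooling, which halves the length of a signal and discards values, with a stride-2 transposed convolution, an upsampling operation often used in convolutional generators (Keras, for example, provides it as the `Conv2DTranspose` layer). The kernel and input values are made-up examples; in a real generator the kernel is learned and the operations run in 2-D over many channels.

```python
def max_pool_1d(signal, size=2):
    """Downsample: keep only the max of each non-overlapping window (the rest is discarded)."""
    return [max(signal[i:i + size]) for i in range(0, len(signal) - size + 1, size)]

def conv_transpose_1d(signal, kernel, stride=2):
    """Upsample: each input value adds a scaled copy of the kernel into a longer output.
    With no padding, output length = (len(signal) - 1) * stride + len(kernel)."""
    out = [0.0] * ((len(signal) - 1) * stride + len(kernel))
    for i, v in enumerate(signal):
        for k, weight in enumerate(kernel):
            out[i * stride + k] += v * weight
    return out

image_row = [0.1, 0.9, 0.4, 0.3, 0.8, 0.2, 0.5, 0.7]
pooled = max_pool_1d(image_row)                                      # 8 values -> 4
noise = [0.5, -1.0, 2.0]
upsampled = conv_transpose_1d(noise, kernel=[1.0, 0.5], stride=2)   # 3 values -> 6
print(pooled)     # [0.9, 0.4, 0.8, 0.7]
print(upsampled)  # [0.5, 0.25, -1.0, -0.5, 2.0, 1.0]
```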

If you want to learn more about generating images, Brandon Amos wrote a great post about interpreting images as samples from a probability distribution.

GANs, Autoencoders and VAEs

It is useful to compare GANs with other neural networks, such as autoencoders and variational autoencoders (VAEs).

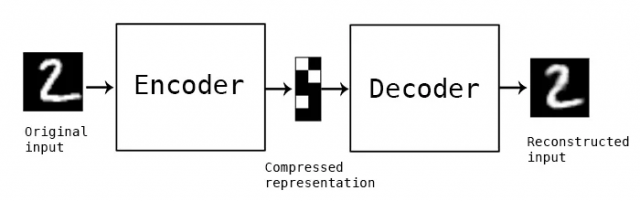

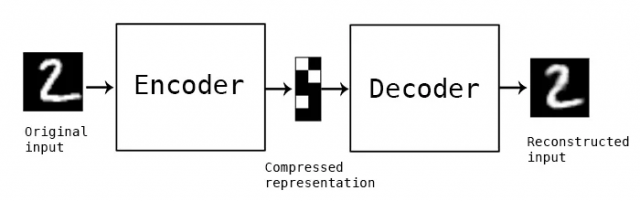

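Autoencoders encode input data as vectors. They create a hidden, or compressed, representation of the raw data. They are useful for dimensionality reduction; that is, the vector serving as the hidden representation compresses the raw data into a smaller number of salient dimensions. Autoencoders can be paired with a so-called decoder, which lets you reconstruct the input data from its hidden representation, much as you would with a restricted Boltzmann machine.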
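A minimal sketch of the encode/decode idea, assuming hand-set weights instead of learned ones: a linear encoder that compresses 2-D points to a single number, and a linear decoder that maps that number back. The points are made up so that they lie on one line, which is why one dimension is enough to reconstruct them. A trained autoencoder would instead learn its weights (usually with nonlinear layers) by minimizing reconstruction error.

```python
import math

# Hypothetical 2-D data that actually lies along the direction (1, 2).
points = [(1.0, 2.0), (2.0, 4.0), (-0.5, -1.0), (3.0, 6.0)]

# Hand-set linear autoencoder: project onto the unit vector u (2 dims -> 1) and back.
norm = math.hypot(1.0, 2.0)
u = (1.0 / norm, 2.0 / norm)

def encode(p):
    """Compressed, hidden representation: a single number per point."""
    return p[0] * u[0] + p[1] * u[1]

def decode(code):
    """Reconstruction from the hidden representation."""
    return (code * u[0], code * u[1])

codes = [encode(p) for p in points]
recon = [decode(c) for c in codes]
errors = [math.dist(p, r) for p, r in zip(points, recon)]
print(codes, max(errors))
```

Compression is lossy in general: a point off that line, such as (1, 0), is reconstructed as roughly (0.2, 0.4).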

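Variational autoencoders are generative algorithms that add an extra constraint to the encoding of the input data, namely that the hidden representations are normalized (in practice, the encoder outputs a Gaussian distribution that is pushed toward a standard normal prior). VAEs can compress data like an autoencoder and synthesize data like a GAN. However, while GANs generate data in fine, granular detail, images generated by VAEs tend to be blurrier. Deeplearning4j's examples include both autoencoders and variational autoencoders.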
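The sketch below shows, in plain Python, the two pieces most VAE implementations share: the reparameterization trick, which samples a latent vector from the encoder's Gaussian in a way that stays differentiable, and the closed-form KL divergence that pulls that Gaussian toward N(0, I). The encoder outputs (a mean and log-variance per latent dimension) are hypothetical numbers, and the encoder and decoder networks themselves are omitted.

```python
import math
import random

def reparameterize(mu, log_var, rng):
    """Sample z ~ N(mu, sigma^2) as z = mu + sigma * eps with eps ~ N(0, 1),
    so z remains a differentiable function of mu and log_var."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]

def kl_to_standard_normal(mu, log_var):
    """KL( N(mu, diag(sigma^2)) || N(0, I) ): the penalty that keeps the
    hidden representation close to a standard normal."""
    return -0.5 * sum(1 + lv - m * m - math.exp(lv) for m, lv in zip(mu, log_var))

# Hypothetical encoder outputs for one input, with a 3-dimensional latent space.
mu, log_var = [0.3, -1.2, 0.0], [-0.5, 0.1, 0.0]
z = reparameterize(mu, log_var, random.Random(0))
print(z, kl_to_standard_normal(mu, log_var))
```

The full VAE loss adds this KL term to a reconstruction loss. To synthesize new data, you sample z from N(0, I) and pass it through the trained decoder.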

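You can divide generative algorithms into three types:

- Given a label, predict the associated features (Naive Bayes)

- Given a hidden representation, predict the associated features (VAE, GAN)

- Given some of the features, predict the rest (inpainting, imputation)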

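Tips for training GANs:

When you train the discriminator, hold the generator's values constant; when you train the generator, hold the discriminator constant. Each should train against a static adversary. For example, this gives the generator a clearer read on the gradient it has to learn from.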
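The alternating schedule can be sketched with a one-dimensional toy GAN and hand-derived gradients. Everything here is an illustrative assumption: real data drawn from N(4, 1.25), a linear generator G(z) = a*z + b, a logistic discriminator D(x) = sigmoid(w*x + c), plain gradient descent, and the learning rate, batch size and step count. The point is the structure: `d_step` only writes the discriminator's parameters and `g_step` only writes the generator's, which is what the Keras code later in this post achieves with `discriminator.trainable = False`.

```python
import math
import random

rng = random.Random(0)

def sigmoid(s):
    return 1.0 / (1.0 + math.exp(-s))

gen = {"a": 1.0, "b": 0.0}   # generator G(z) = a*z + b
disc = {"w": 0.0, "c": 0.0}  # discriminator D(x) = sigmoid(w*x + c)
lr, batch = 0.05, 64

def d_step():
    """Update only the discriminator; the generator's parameters are read but never written."""
    reals = [rng.gauss(4.0, 1.25) for _ in range(batch)]
    fakes = [gen["a"] * rng.gauss(0.0, 1.0) + gen["b"] for _ in range(batch)]
    gw = gc = 0.0
    # Gradient of -log D(real) - log(1 - D(fake)) with respect to the logit is D - 1 and D.
    for x in reals:
        g = sigmoid(disc["w"] * x + disc["c"]) - 1.0
        gw, gc = gw + g * x, gc + g
    for x in fakes:
        g = sigmoid(disc["w"] * x + disc["c"])
        gw, gc = gw + g * x, gc + g
    disc["w"] -= lr * gw / (2 * batch)
    disc["c"] -= lr * gc / (2 * batch)

def g_step():
    """Update only the generator against the frozen discriminator, using -log D(G(z))."""
    ga = gb = 0.0
    for _ in range(batch):
        z = rng.gauss(0.0, 1.0)
        x = gen["a"] * z + gen["b"]
        g = (sigmoid(disc["w"] * x + disc["c"]) - 1.0) * disc["w"]  # dLoss/dx
        ga, gb = ga + g * z, gb + g
    gen["a"] -= lr * ga / batch
    gen["b"] -= lr * gb / batch

for step in range(20):
    d_step()  # generator held constant
    g_step()  # discriminator held constant
print(gen, disc)
```

A linear discriminator mostly reacts to differences in the average, so this toy mainly pulls the generator's mean toward the data; it illustrates the training loop rather than being a useful generative model.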

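For the same reason, pretraining the discriminator on MNIST before you start training the generator will establish a clearer gradient.

Either side of the GAN can overpower the other. If the discriminator is too good, it will return values so close to 0 or 1 that the generator will struggle to read the gradient. If the generator is too good, it will persistently exploit weaknesses in the discriminator that lead to false negatives. Both problems may be mitigated by tuning the networks' respective learning rates.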
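The first failure mode can be made concrete. Let s be the discriminator's logit for a generated sample, so D(G(z)) = sigmoid(s). The minimax generator loss log(1 - D(G(z))) has gradient -D with respect to s, which vanishes when a confident discriminator pushes D(G(z)) toward 0. Goodfellow et al. (2014) therefore suggested training the generator to maximize log D(G(z)) instead, whose gradient D - 1 stays near -1 in that regime; the Keras code later in this post follows that approach by training the generator with labels of 1. The sketch below simply evaluates both gradients in plain Python.

```python
import math

def sigmoid(s):
    return 1.0 / (1.0 + math.exp(-s))

def grad_saturating(s):
    """d/ds of log(1 - D) with D = sigmoid(s): the original minimax generator loss."""
    return -sigmoid(s)

def grad_non_saturating(s):
    """d/ds of -log D with D = sigmoid(s): the 'non-saturating' generator loss."""
    return sigmoid(s) - 1.0

# A very negative logit s means the discriminator is confident the sample is fake.
for s in [0.0, -5.0, -10.0]:
    print(f"D={sigmoid(s):.5f}  saturating={grad_saturating(s):.5f}  "
          f"non-saturating={grad_non_saturating(s):.5f}")
```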

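GANs take a long time to train: a few hours on a single GPU, and more than a day on a single CPU. Although they are hard to tune and therefore hard to use, GANs have inspired a lot of interesting research and writing.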

On to the code~

Here is a GAN written in Keras:

```python
# Assumes the classic Keras 2 API (tf.keras in TensorFlow 2.x, before Keras 3).
import os

import matplotlib.pyplot as plt
import numpy as np
from tensorflow.keras.datasets import mnist
from tensorflow.keras.layers import BatchNormalization, Dense, Flatten, Input, LeakyReLU, Reshape
from tensorflow.keras.models import Model, Sequential
from tensorflow.keras.optimizers import Adam


class GAN():
    def __init__(self):
        self.img_rows = 28
        self.img_cols = 28
        self.channels = 1
        self.img_shape = (self.img_rows, self.img_cols, self.channels)

        # Build and compile the discriminator.
        # Each compiled model gets its own optimizer: recent TensorFlow versions
        # refuse to reuse one optimizer instance for different sets of variables.
        self.discriminator = self.build_discriminator()
        self.discriminator.compile(loss='binary_crossentropy',
                                   optimizer=Adam(learning_rate=0.0002, beta_1=0.5),
                                   metrics=['accuracy'])

        # Build the generator (it is only trained through the combined model below)
        self.generator = self.build_generator()

        # The generator takes noise as input and generates imgs
        z = Input(shape=(100,))
        img = self.generator(z)

        # For the combined model we will only train the generator.
        # Keras 2 captures `trainable` at compile time, so the discriminator
        # compiled above still trains when called on its own.
        self.discriminator.trainable = False

        # The discriminator takes generated images as input and determines validity
        valid = self.discriminator(img)

        # The combined model (stacked generator and discriminator) takes
        # noise as input => generates images => determines validity
        self.combined = Model(z, valid)
        self.combined.compile(loss='binary_crossentropy',
                              optimizer=Adam(learning_rate=0.0002, beta_1=0.5))

    def build_generator(self):
        noise_shape = (100,)

        model = Sequential()
        model.add(Dense(256, input_shape=noise_shape))
        model.add(LeakyReLU(alpha=0.2))
        model.add(BatchNormalization(momentum=0.8))
        model.add(Dense(512))
        model.add(LeakyReLU(alpha=0.2))
        model.add(BatchNormalization(momentum=0.8))
        model.add(Dense(1024))
        model.add(LeakyReLU(alpha=0.2))
        model.add(BatchNormalization(momentum=0.8))
        # tanh keeps generated pixels in [-1, 1], matching the rescaled training data
        model.add(Dense(np.prod(self.img_shape), activation='tanh'))
        model.add(Reshape(self.img_shape))
        model.summary()

        noise = Input(shape=noise_shape)
        img = model(noise)
        return Model(noise, img)

    def build_discriminator(self):
        img_shape = (self.img_rows, self.img_cols, self.channels)

        model = Sequential()
        model.add(Flatten(input_shape=img_shape))
        model.add(Dense(512))
        model.add(LeakyReLU(alpha=0.2))
        model.add(Dense(256))
        model.add(LeakyReLU(alpha=0.2))
        # Single sigmoid output: probability that the image is real
        model.add(Dense(1, activation='sigmoid'))
        model.summary()

        img = Input(shape=img_shape)
        validity = model(img)
        return Model(img, validity)

    def train(self, epochs, batch_size=128, save_interval=50):
        # Load the dataset
        (X_train, _), (_, _) = mnist.load_data()

        # Rescale pixel values from [0, 255] to [-1, 1]
        X_train = (X_train.astype(np.float32) - 127.5) / 127.5
        X_train = np.expand_dims(X_train, axis=3)

        half_batch = batch_size // 2

        for epoch in range(epochs):
            # ---------------------
            #  Train Discriminator
            # ---------------------

            # Select a random half batch of real images
            idx = np.random.randint(0, X_train.shape[0], half_batch)
            imgs = X_train[idx]

            noise = np.random.normal(0, 1, (half_batch, 100))

            # Generate a half batch of new images
            gen_imgs = self.generator.predict(noise, verbose=0)

            # Train the discriminator: real images labeled 1, generated images labeled 0
            d_loss_real = self.discriminator.train_on_batch(imgs, np.ones((half_batch, 1)))
            d_loss_fake = self.discriminator.train_on_batch(gen_imgs, np.zeros((half_batch, 1)))
            d_loss = 0.5 * np.add(d_loss_real, d_loss_fake)

            # ---------------------
            #  Train Generator
            # ---------------------

            noise = np.random.normal(0, 1, (batch_size, 100))

            # The generator wants the discriminator to label the generated samples
            # as valid (ones)
            valid_y = np.ones((batch_size, 1))

            # Train the generator (the discriminator is frozen inside the combined model)
            g_loss = self.combined.train_on_batch(noise, valid_y)

            # Print the progress
            print("%d [D loss: %f, acc.: %.2f%%] [G loss: %f]" % (epoch, d_loss[0], 100 * d_loss[1], g_loss))

            # If at save interval => save generated image samples
            if epoch % save_interval == 0:
                self.save_imgs(epoch)

    def save_imgs(self, epoch):
        r, c = 5, 5
        noise = np.random.normal(0, 1, (r * c, 100))
        gen_imgs = self.generator.predict(noise, verbose=0)

        # Rescale images from [-1, 1] to [0, 1]
        gen_imgs = 0.5 * gen_imgs + 0.5

        fig, axs = plt.subplots(r, c)
        cnt = 0
        for i in range(r):
            for j in range(c):
                axs[i, j].imshow(gen_imgs[cnt, :, :, 0], cmap='gray')
                axs[i, j].axis('off')
                cnt += 1
        os.makedirs("gan/images", exist_ok=True)  # savefig fails if the folder is missing
        fig.savefig("gan/images/mnist_%d.png" % epoch)
        plt.close()


if __name__ == '__main__':
    gan = GAN()
    gan.train(epochs=30000, batch_size=32, save_interval=200)
```

Some resources on generative networks

GAN Use Cases

Notable Papers on GANs

- [Generative Adversarial Nets] [Code](Ian Goodfellow’s breakthrough paper)

Unclassified Papers & Resources

- GAN Hacks: How to Train a GAN? Tips and tricks to make GANs work

- [Deep Generative Image Models using a Laplacian Pyramid of Adversarial Networks] [Code]

- [Adversarial Autoencoders] [Code]

- [Generating Images with Perceptual Similarity Metrics based on Deep Networks] [Paper]

- [Generating images with recurrent adversarial networks] [Code]

- [Generative Visual Manipulation on the Natural Image Manifold] [Code]

- [Learning What and Where to Draw] [Code]

- [Adversarial Training for Sketch Retrieval] [Paper]

- [Generative Image Modeling using Style and Structure Adversarial Networks] [Code]

- [Generative Adversarial Networks as Variational Training of Energy Based Models] [Paper](ICLR 2017)

- [Synthesizing the preferred inputs for neurons in neural networks via deep generator networks] [Code]

- [SalGAN: Visual Saliency Prediction with Generative Adversarial Networks] [Code]

- [Adversarial Feature Learning] [Paper]

Generating High-Quality Images

- [Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks] [Code](GAN with convolutional networks, ICLR)

- [Generative Adversarial Text to Image Synthesis] [Code]

- [Improved Techniques for Training GANs] [Code](Goodfellow’s paper)

- [Plug & Play Generative Networks: Conditional Iterative Generation of Images in Latent Space] [Code]

- [StackGAN: Text to Photo-realistic Image Synthesis with Stacked Generative Adversarial Networks] [Code]

- [Improved Training of Wasserstein GANs] [Code]

- [Boundary Equilibrium Generative Adversarial Networks Implementation in Tensorflow] [Code]

- [Progressive Growing of GANs for Improved Quality, Stability, and Variation] [Code]

Semi-supervised learning

- [Adversarial Training Methods for Semi-Supervised Text Classification] [Note](Ian Goodfellow paper)

- [Improved Techniques for Training GANs] [Code](Goodfellow’s paper)

- [Unsupervised and Semi-supervised Learning with Categorical Generative Adversarial Networks] [Paper](ICLR)

- [Semi-Supervised QA with Generative Domain-Adaptive Nets] [Paper](ACL 2017)

Ensembles

- [AdaGAN: Boosting Generative Models] [Paper] [Code](Google Brain)

Clustering

- [Unsupervised and Semi-supervised Learning with Categorical Generative Adversarial Networks] [Paper](ICLR)

Image blending

- [GP-GAN: Towards Realistic High-Resolution Image Blending] [Code]

Image Inpainting

- [Semantic Image Inpainting with Perceptual and Contextual Losses] [Code](CVPR 2017)

- [Context Encoders: Feature Learning by Inpainting] [Code]

- [Semi-Supervised Learning with Context-Conditional Generative Adversarial Networks] [Paper]

- [Generative face completion] [Code](CVPR 2017)

- [Globally and Locally Consistent Image Completion] [MainPAGE](SIGGRAPH 2017)

Joint Probability

- [Adversarially Learned Inference][Code]

Super-Resolution

- [Image super-resolution through deep learning] [Code](Face dataset only)

- [Photo-Realistic Single Image Super-Resolution Using a Generative Adversarial Network] [Code](Using Deep residual network)

- [EnhanceGAN] [Docs] [Code]

De-occlusion

- [Robust LSTM-Autoencoders for Face De-Occlusion in the Wild] [Paper]

Semantic Segmentation

- [Adversarial Deep Structural Networks for Mammographic Mass Segmentation] [Code]

- [Semantic Segmentation using Adversarial Networks] [Paper](Soumith’s paper)

Object Detection

- [Perceptual generative adversarial networks for small object detection] [Paper](CVPR 2017)

- [A-Fast-RCNN: Hard Positive Generation via Adversary for Object Detection] [Code](CVPR 2017)

RNN-GANs

- [C-RNN-GAN: Continuous recurrent neural networks with adversarial training] [Code]

Conditional Adversarial Nets

- [Conditional Generative Adversarial Nets] [Code]

- [InfoGAN: Interpretable Representation Learning by Information Maximizing Generative Adversarial Nets] [Code]

- [Conditional Image Synthesis With Auxiliary Classifier GANs] [Code](GoogleBrain ICLR 2017)

- [Pixel-Level Domain Transfer] [Code]

- [Invertible Conditional GANs for image editing] [Code]

- [Plug & Play Generative Networks: Conditional Iterative Generation of Images in Latent Space] [Code]

- [StackGAN: Text to Photo-realistic Image Synthesis with Stacked Generative Adversarial Networks] [Code]


Goodfellow et al

Video Prediction & Generation

- [Deep multi-scale video prediction beyond mean square error] [Code](Yann LeCun’s paper)

- [Generating Videos with Scene Dynamics] [Code]

- [MoCoGAN: Decomposing Motion and Content for Video Generation] [Paper]

Texture Synthesis & Style Transfer

- [Precomputed real-time texture synthesis with markovian generative adversarial networks] [Code](ECCV 2016)

Image Translation

- [Unsupervised cross-domain image generation] [Code]

- [Image-to-image translation using conditional adversarial nets] [Code]

- [Learning to Discover Cross-Domain Relations with Generative Adversarial Networks] [Code]

- [Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks] [Code]

- [CoGAN: Coupled Generative Adversarial Networks] [Code](NIPS 2016)

- [Unsupervised Image-to-Image Translation with Generative Adversarial Networks] [Paper]

- [Unsupervised Image-to-Image Translation Networks] [Paper]

- [Triangle Generative Adversarial Networks] [Paper]

GAN Theory

- [Energy-based generative adversarial network] [Code](LeCun paper)

- [Improved Techniques for Training GANs] [Code](Goodfellow’s paper)

- [Mode Regularized Generative Adversarial Networks] [Paper](Yoshua Bengio, ICLR 2017)

- [Improving Generative Adversarial Networks with Denoising Feature Matching] [Code](Yoshua Bengio, ICLR 2017)

- [Sampling Generative Networks] [Code]

- [How to Train GANs] [Docu]

- [Towards Principled Methods for Training Generative Adversarial Networks] [Paper](ICLR 2017)

- [Unrolled Generative Adversarial Networks] [Code](ICLR 2017)

- [Least Squares Generative Adversarial Networks] [Code](ICCV 2017)

- [Wasserstein GAN] [Code]

- [Improved Training of Wasserstein GANs] [Code](The improve of wgan)

- [Generalization and Equilibrium in Generative Adversarial Nets] [Paper](ICML 2017)

3-Dimensional GANs

- [Learning a Probabilistic Latent Space of Object Shapes via 3D Generative-Adversarial Modeling] [Code](NIPS 2016)

- [Transformation-Grounded Image Generation Network for Novel 3D View Synthesis] [Web](CVPR 2017)

Music

- [MidiNet: A Convolutional Generative Adversarial Network for Symbolic-domain Music Generation using 1D and 2D Conditions] [HOMEPAGE]

Face Generation & Editing

- [Autoencoding beyond pixels using a learned similarity metric] [TensorFlow code]

- [Coupled Generative Adversarial Networks] [TensorFlow code](NIPS)

- [Invertible Conditional GANs for image editing] [Code]

- [Learning Residual Images for Face Attribute Manipulation] [Code](CVPR 2017)

- [Neural Photo Editing with Introspective Adversarial Networks] [Code](ICLR 2017)

- [Neural Face Editing with Intrinsic Image Disentangling] [Paper](CVPR 2017)

- [GeneGAN: Learning Object Transfiguration and Attribute Subspace from Unpaired Data] [Code]

- [Beyond Face Rotation: Global and Local Perception GAN for Photorealistic and Identity Preserving Frontal View Synthesis] [Paper](ICCV 2017)

For Discrete Distributions

- [Maximum-Likelihood Augmented Discrete Generative Adversarial Networks] [Paper]

- [Boundary-Seeking Generative Adversarial Networks] [Paper]

- [GANs for Sequences of Discrete Elements with the Gumbel-softmax Distribution] [Paper]

Improving Classification & Recognition

- [Generative OpenMax for Multi-Class Open Set Classification] [Paper](BMVC 2017)

- [Controllable Invariance through Adversarial Feature Learning] [Code](NIPS 2017)

- [Unlabeled Samples Generated by GAN Improve the Person Re-identification Baseline in vitro] [Code](ICCV 2017)

- [Learning from Simulated and Unsupervised Images through Adversarial Training] [Code](Apple paper, CVPR 2017 Best Paper)

Projects

- [cleverhans] [Code](A library for benchmarking vulnerability to adversarial examples)

- [resnet-cppn-gan-tensorflow] [Code](Using Residual Generative Adversarial Networks and Variational Auto-encoder techniques to produce high-resolution images)

- [HyperGAN] [Code](Open source GAN focused on scale and usability)

Tutorials