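On a whim today, I decided to write a clean implementation of the classic convolution algorithm: one that uses none of the usual acceleration techniques and only tidies up the logic of the algorithm itself.

The performance turned out to be decent, so I am sharing it here. If any reader optimizes it further on this basis, even better.

The algorithm is simple: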

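#include <stdlib.h>
#include <string.h>

// Clamps n to the range [0, 255] without branching.
// Assumes a 32-bit int and an arithmetic right shift of negative values.
static inline unsigned char Clamp2Byte(int n) {
    return (unsigned char)(((255 - n) >> 31) | (n & ~(n >> 31)));
}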
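To see why the shift-and-mask expression in Clamp2Byte really clamps, it can be checked against a plain branching clamp. This is only an illustrative sketch: `ClampBranchless`, `ClampReference` and `ClampsAgree` are hypothetical names that are not part of the original code, and the sketch assumes a 32-bit `int` with an arithmetic right shift of negative values (common in practice, but implementation-defined in C).

```c
// Same expression as Clamp2Byte, copied so the sketch is self-contained.
// Assumes a 32-bit int and an arithmetic right shift of negative values.
static unsigned char ClampBranchless(int n) {
    // (255 - n) >> 31 is all ones when n > 255, which forces the low byte to 0xFF.
    // n >> 31 is all ones when n < 0, so n & ~(n >> 31) becomes 0.
    return (unsigned char)(((255 - n) >> 31) | (n & ~(n >> 31)));
}

// Straightforward reference clamp (hypothetical helper).
static unsigned char ClampReference(int n) {
    if (n < 0) return 0;
    if (n > 255) return 255;
    return (unsigned char)n;
}

// Returns 1 if both clamps agree on every value in [lo, hi], otherwise 0.
static int ClampsAgree(int lo, int hi) {
    for (int n = lo; n <= hi; n++) {
        if (ClampBranchless(n) != ClampReference(n)) return 0;
    }
    return 1;
}
```

Calling `ClampsAgree(-100000, 100000)` covers far more than the range a small integer kernel can produce. Values close to `INT_MIN` are deliberately left out, because `255 - n` would overflow there.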

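// Filters an interleaved 8-bit image in place with a filterW x filterW integer kernel.
// Each weighted sum is scaled by (256 / cfactor) / 256, an integer approximation of
// dividing by cfactor, and then offset by bias. Pixels past a border wrap around to
// the opposite side. Supports 1, 3 or 4 channels; a fourth channel is copied unchanged.
// Note: fx (the horizontal offset) selects the kernel row, so the kernel is applied
// transposed; the kernels used in this post are symmetric, so they are unaffected.
void Convolution2D(unsigned char *data, unsigned int width, unsigned int height, unsigned int channels, int *filter, unsigned char filterW, unsigned char cfactor, unsigned char bias) {
    unsigned int halfW = filterW / 2;
    // Reject inputs that the loops below cannot handle safely.
    if (data == NULL || filter == NULL || cfactor == 0) return;
    if (width == 0 || height == 0 || width < halfW || height < halfW) return;
    if (channels != 1 && channels != 3 && channels != 4) return;
    size_t size = (size_t)width * height * channels;
    unsigned char *tmpData = (unsigned char *)malloc(size);
    if (tmpData == NULL) return;
    // Start from a copy so that an unfiltered fourth channel keeps its original value.
    memcpy(tmpData, data, size);
    int factor = 256 / cfactor;
    if (channels == 3 || channels == 4) {
        for (unsigned int y = 0; y < height; y++) {
            // Adding height keeps the value non-negative before the modulo below.
            unsigned int y1 = y - halfW + height;
            for (unsigned int x = 0; x < width; x++) {
                unsigned int x1 = x - halfW + width;
                int r = 0;
                int g = 0;
                int b = 0;
                unsigned int p = (y * width + x) * channels;
                for (unsigned int fx = 0; fx < filterW; fx++) {
                    unsigned int dx = (x1 + fx) % width;
                    unsigned int fidx = fx * filterW;
                    for (unsigned int fy = 0; fy < filterW; fy++) {
                        unsigned int pos = (((y1 + fy) % height) * width + dx) * channels;
                        int weight = filter[fidx + fy];
                        r += data[pos] * weight;
                        g += data[pos + 1] * weight;
                        b += data[pos + 2] * weight;
                    }
                }
                tmpData[p] = Clamp2Byte(((factor * r) >> 8) + bias);
                tmpData[p + 1] = Clamp2Byte(((factor * g) >> 8) + bias);
                tmpData[p + 2] = Clamp2Byte(((factor * b) >> 8) + bias);
            }
        }
    } else {
        // Single-channel (grayscale) image.
        for (unsigned int y = 0; y < height; y++) {
            unsigned int y1 = y - halfW + height;
            for (unsigned int x = 0; x < width; x++) {
                int r = 0;
                unsigned int p = y * width + x;
                unsigned int x1 = x - halfW + width;
                for (unsigned int fx = 0; fx < filterW; fx++) {
                    unsigned int dx = (x1 + fx) % width;
                    unsigned int fidx = fx * filterW;
                    for (unsigned int fy = 0; fy < filterW; fy++) {
                        unsigned int pos = ((y1 + fy) % height) * width + dx;
                        r += data[pos] * filter[fidx + fy];
                    }
                }
                tmpData[p] = Clamp2Byte(((factor * r) >> 8) + bias);
            }
        }
    }
    memcpy(data, tmpData, size);
    free(tmpData);
}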
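Convolution2D never reads outside the image: a filter tap that falls past a border wraps around to the opposite side, via the `x - halfW + width` and `% width` arithmetic. The sketch below isolates that index calculation. `WrapIndex` is a hypothetical helper introduced only for illustration, and it assumes `halfW <= width` so that the unsigned sum cannot go below zero.

```c
// Hypothetical helper: the source column read for output column x and
// filter tap fx, using the same wrap-around arithmetic as Convolution2D.
// Assumes halfW <= width.
static unsigned int WrapIndex(unsigned int x, unsigned int fx,
                              unsigned int halfW, unsigned int width) {
    // Adding width first keeps the value non-negative before the modulo.
    return (x + width - halfW + fx) % width;
}
```

For a row of width 10 and a 5-tap filter (`halfW` = 2), output column 0 reads source columns 8, 9, 0, 1, 2. Wrapping is cheap, but it does blend opposite edges of the picture; clamping or mirroring the coordinate would be alternative border policies.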

Usage examples:

// Blur
int Blurfilter[25] = {
    0, 0, 1, 0, 0,
    0, 1, 1, 1, 0,
    1, 1, 1, 1, 1,
    0, 1, 1, 1, 0,
    0, 0, 1, 0, 0,
};
Convolution2D(imgData, imgWidth, imgHeight, imgChannels, Blurfilter, 5, 13, 0);
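One thing worth knowing about the `cfactor` argument: Convolution2D replaces the division by a multiplication with `256 / cfactor` followed by a shift by 8. For the blur call, `256 / 13` truncates to 19, so the effective divisor is 256 / 19, roughly 13.47, and the result comes out slightly darker than a true division by 13. The sketch below compares that scaling with a variant that keeps 16 fractional bits and rounds. `ScaleQ8` mirrors the arithmetic in the post; `ScaleQ16` is a hypothetical alternative, not something the original code does, and it assumes `cfactor > 0`.

```c
// Normalisation as done in Convolution2D: 8 fractional bits and a
// truncated reciprocal.
static int ScaleQ8(int sum, int cfactor) {
    int factor = 256 / cfactor;
    return (factor * sum) >> 8;
}

// Hypothetical alternative: 16 fractional bits, a rounded reciprocal and
// rounding of the final result. Assumes cfactor > 0; like the original, it
// relies on an arithmetic right shift when sum is negative.
static int ScaleQ16(int sum, int cfactor) {
    long long factor = (65536 + cfactor / 2) / cfactor;
    return (int)((factor * sum + 32768) >> 16);
}
```

For a flat area of value 200 under the 13-weight blur kernel the sum is 2600: `ScaleQ8(2600, 13)` gives 192, while `ScaleQ16(2600, 13)` gives 200. The gap grows with kernels whose divisor is far from a power of two.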

// Motion blur
int MotionBlurfilter[81] = {
    1, 0, 0, 0, 0, 0, 0, 0, 0,
    0, 1, 0, 0, 0, 0, 0, 0, 0,
    0, 0, 1, 0, 0, 0, 0, 0, 0,
    0, 0, 0, 1, 0, 0, 0, 0, 0,
    0, 0, 0, 0, 1, 0, 0, 0, 0,
    0, 0, 0, 0, 0, 1, 0, 0, 0,
    0, 0, 0, 0, 0, 0, 1, 0, 0,
    0, 0, 0, 0, 0, 0, 0, 1, 0,
    0, 0, 0, 0, 0, 0, 0, 0, 1
};
Convolution2D(imgData, imgWidth, imgHeight, imgChannels, MotionBlurfilter, 9, 9, 0);

// Edge detection 1
int edges1filter[25] = {
    -1,  0, 0,  0,  0,
     0, -2, 0,  0,  0,
     0,  0, 6,  0,  0,
     0,  0, 0, -2,  0,
     0,  0, 0,  0, -1,
};
Convolution2D(imgData, imgWidth, imgHeight, imgChannels, edges1filter, 5, 1, 0);

// Edge detection 2
int edges2filter[9] = {
    -1, -1, -1,
    -1,  8, -1,
    -1, -1, -1
};
Convolution2D(imgData, imgWidth, imgHeight, imgChannels, edges2filter, 3, 1, 0);

// Sharpen 1
int sharpen1filter[9] = {
    -1, -1, -1,
    -1,  9, -1,
    -1, -1, -1
};
Convolution2D(imgData, imgWidth, imgHeight, imgChannels, sharpen1filter, 3, 1, 0);

// Sharpen 2
int sharpen2filter[25] = {
    -1, -1, -1, -1, -1,
    -1,  2,  2,  2, -1,
    -1,  2,  8,  2, -1,
    -1,  2,  2,  2, -1,
    -1, -1, -1, -1, -1,
};
Convolution2D(imgData, imgWidth, imgHeight, imgChannels, sharpen2filter, 5, 8, 0);

// Sharpen 3
int sharpen3filter[9] = {
    1,  1, 1,
    1, -7, 1,
    1,  1, 1
};
Convolution2D(imgData, imgWidth, imgHeight, imgChannels, sharpen3filter, 3, 1, 0);

// Emboss 1
int Embossfilter[9] = {
    -1, -1, 0,
    -1,  0, 1,
     0,  1, 1
};
Convolution2D(imgData, imgWidth, imgHeight, imgChannels, Embossfilter, 3, 1, 128);

// Emboss 2
int emboss2filter[25] = {
    -1, -1, -1, -1, 0,
    -1, -1, -1,  0, 1,
    -1, -1,  0,  1, 1,
    -1,  0,  1,  1, 1,
     0,  1,  1,  1, 1
};
Convolution2D(imgData, imgWidth, imgHeight, imgChannels, emboss2filter, 5, 1, 128);
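The two emboss calls are the only ones that pass a non-zero `bias`. The weights of both emboss kernels sum to zero, so a perfectly flat area produces a sum of 0, and the bias of 128 lifts it to mid-gray. The sketch below works this out for a single pixel. `FlatResponse` is a hypothetical helper written for this illustration; it reuses the scaling from Convolution2D but leaves out the final clamp.

```c
// Hypothetical helper: unclamped output for a pixel whose whole
// neighbourhood has the same gray value, using the same scaling as
// Convolution2D ((256 / cfactor) * sum >> 8, then + bias).
static int FlatResponse(const int *kernel, int count, int value,
                        int cfactor, int bias) {
    int sum = 0;
    for (int i = 0; i < count; i++) {
        sum += value * kernel[i];
    }
    return (((256 / cfactor) * sum) >> 8) + bias;
}
```

With the 3x3 emboss kernel, a flat area of value 200 yields 128 when the bias is 128 and 0 when the bias is 0. Without the bias, every negative response would be clamped to 0 and roughly half of the relief would disappear.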

// Mean blur 1
int meanfilter[9] = {
    1, 1, 1,
    1, 1, 1,
    1, 1, 1
};
Convolution2D(imgData, imgWidth, imgHeight, imgChannels, meanfilter, 3, 9, 0);

// Mean blur 2
int mean2filter[81] = {
    1, 1, 1, 1, 1, 1, 1, 1, 1,
    1, 1, 1, 1, 1, 1, 1, 1, 1,
    1, 1, 1, 1, 1, 1, 1, 1, 1,
    1, 1, 1, 1, 1, 1, 1, 1, 1,
    1, 1, 1, 1, 1, 1, 1, 1, 1,
    1, 1, 1, 1, 1, 1, 1, 1, 1,
    1, 1, 1, 1, 1, 1, 1, 1, 1,
    1, 1, 1, 1, 1, 1, 1, 1, 1,
    1, 1, 1, 1, 1, 1, 1, 1, 1
};
Convolution2D(imgData, imgWidth, imgHeight, imgChannels, mean2filter, 9, 81, 0);
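Looking back over the calls above, the `cfactor` passed in each case equals the sum of the kernel's weights (13, 9, 8, 9, 81), or 1 when the weights sum to 0 or 1. Dividing by the sum is what keeps the overall brightness unchanged. A small sketch of that rule follows; `KernelSum` and `SuggestCfactor` are hypothetical helpers, not part of the original code.

```c
// Hypothetical helper: sum of all weights in a filterW x filterW kernel.
static int KernelSum(const int *filter, int filterW) {
    int sum = 0;
    for (int i = 0; i < filterW * filterW; i++) {
        sum += filter[i];
    }
    return sum;
}

// Hypothetical helper: divisor that preserves brightness. Falls back to 1
// when the sum is zero or negative, as with the edge and emboss kernels.
static int SuggestCfactor(const int *filter, int filterW) {
    int sum = KernelSum(filter, filterW);
    return sum > 0 ? sum : 1;
}
```

Since Convolution2D takes `cfactor` as an `unsigned char`, this only works directly for kernels whose sum is at most 255.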

I ran the edge-detection kernel on a 960x1280 image; on my machine it took 100 ms.

// Edge detection 1
int edges1filter[25] = {
    -1,  0, 0,  0,  0,
     0, -2, 0,  0,  0,
     0,  0, 6,  0,  0,
     0,  0, 0, -2,  0,
     0,  0, 0,  0, -1,
};
Convolution2D(imgData, imgWidth, imgHeight, imgChannels, edges1filter, 5, 1, 0);
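To put the 100 ms figure in perspective, the amount of work can be estimated from the loop structure: every filtered channel of every pixel visits all `filterW * filterW` weights. The sketch below does that arithmetic. `MacCount` and `MegaMacsPerSecond` are hypothetical helpers, and the channel count of the test image is not stated in the post, so 3 channels is purely an assumption.

```c
// Hypothetical helper: number of multiply-accumulate operations needed to
// filter one image (each channel of each pixel visits the whole kernel).
static unsigned long long MacCount(unsigned int width, unsigned int height,
                                   unsigned int channels, unsigned int filterW) {
    return (unsigned long long)width * height * channels * filterW * filterW;
}

// Hypothetical helper: throughput in millions of multiply-accumulates per
// second for a given run time in milliseconds.
static double MegaMacsPerSecond(unsigned long long macs, double milliseconds) {
    return (double)macs / (milliseconds * 1000.0);
}
```

Under the 3-channel assumption, a 960x1280 image with the 5x5 kernel needs 92,160,000 multiply-accumulates, which at 100 ms works out to about 921.6 million per second.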

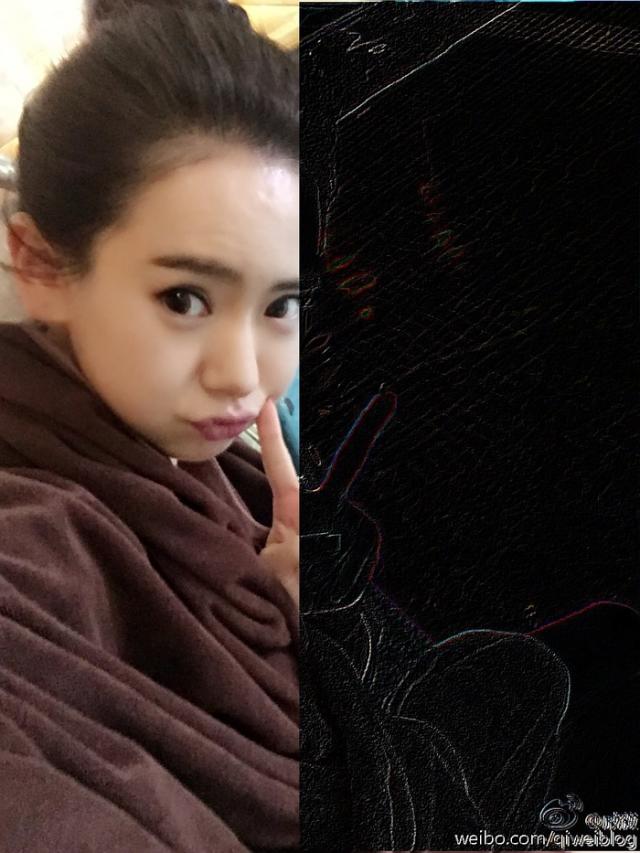

效果图:

For more background, see any of the online encyclopedias.

Keywords: convolution

Article link: http://task.lmcjl.com/news/12240.html